Key Takeaways:

- AI search tools like ChatGPT, Gemini, and Claude are gradually replacing Google (and Bing, etc.) as the primary research discovery method, and most journals are invisible to them.

- SEO has evolved from keywords to EEAT to entity-based AI visibility – journals need to adapt their approach, not abandon what worked before.

- Small and mid-sized publishers that don’t adapt risk falling far behind major publishers with large budgets and technical teams.

- 5 content fixes can boost AI visibility immediately – detailed below.

- A complete content strategy, borrowing from B2B, can get your journal caught up in about half a year.

Your journal isn’t showing up when researchers ask AI where to publish. ChatGPT, Gemini, Claude, and DeepSeek don’t recognize your journal because your website doesn’t clearly explain what you publish, who should submit, and why.

When researchers ask “Where can I publish research on coral reef ecology?”, your journal doesn’t appear. When they search for journals in marine biology, you’re not mentioned. You’re partly or fully invisible to the tools researchers now use to find publication venues.

Meanwhile, AI tools readily recommend PLOS ONE, Elsevier journals, and Springer Nature titles.

The problem isn’t just about the content; it’s about how you present your journal online.

How SEO evolved (and why that matters now)

For more than two decades, SEO has evolved through three distinct phases. Understanding this progression helps explain why most journals are struggling now.

Phase 1: Keyword SEO (2000-2015)

Early SEO was simple. You identified the terms researchers searched for. You sprinkled them throughout your titles and abstracts. You built backlinks from other academic sites. Google rewarded you with rankings. This was purely about matching words and counting links.

Phase 2: EEAT SEO (2015-2024)

Google got smarter. It developed EEAT (Experience, Expertise, Authoritativeness, Trustworthiness) as the quality framework in its Search Quality Rater Guidelines. Keywords still mattered, but Google started asking: Does this author have credentials? Is the website authoritative? Can we trust this information? For academic journals, this meant author bios, institutional affiliations, and peer review processes became signals Google used to judge quality.

Phase 3: AI-SEO/GEO (2024-present)

AI models operate differently than Google ever did. They don’t just evaluate individual pages. They build comprehensive portraits of brands through entity recognition. They ask: Is this journal mentioned consistently across authoritative platforms? Do researchers cite it? Does it appear in academic discussions on Reddit, LinkedIn, and discipline-specific forums? Is the brand entity clear and consistent everywhere?

Recent analysis shows that brand mentions, entity consistency, and cross-platform credibility now determine AI visibility more than any single on-page optimization. When ChatGPT decides which journals to cite, it evaluates your entire digital footprint. Your presence on Google Scholar. Mentions in news articles. Discussions in academic communities. Consistency of your brand signals across platforms.

Think of Google as evaluating individual articles. AI models evaluate your journal as an entity with a reputation.

Recent analysis of 680 million AI citations from August 2024 to June 2025 reveals the shift. Wikipedia accounts for nearly 8% of ChatGPT’s citations, making it the single most cited source. In Google AI Overviews, Reddit accounts for 2.2% of citations, placing it among the top sources. Commercial (.com) domains dominate, with over 80% of citations across platforms.

Where do academic journals fit? Barely. Unless you’re publishing with a major player that has invested heavily in building its brand entity and cross-platform presence, you’re getting sidelined.

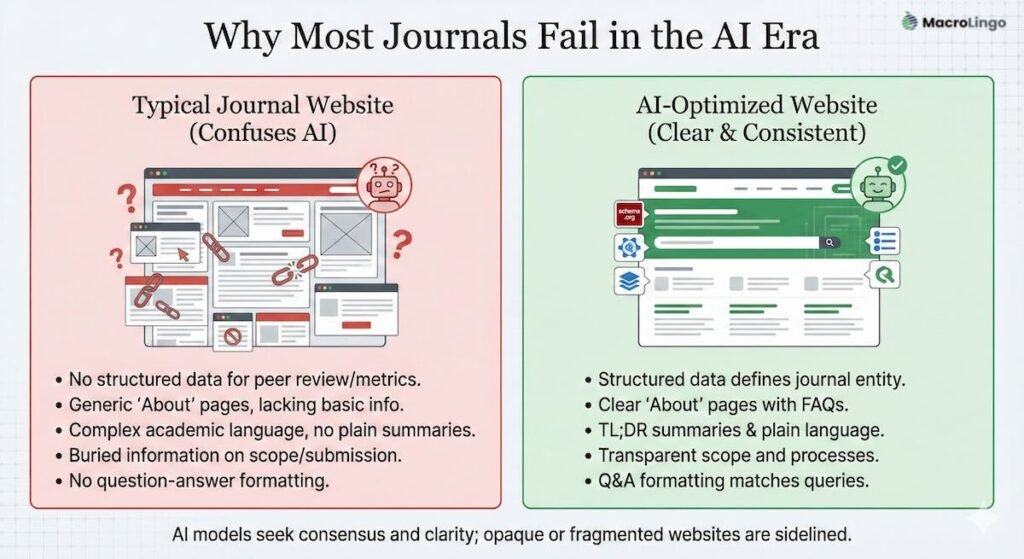

This is where most journals fall short. Their research is excellent, but their website presentation confuses AI. Consider what an AI model encounters when trying to understand a typical journal website:

- No structured data telling it “This is a peer-reviewed journal that publishes X topics, with Y review time, and Z acceptance rate”

- Generic “About” pages that don’t answer basic questions like “What do you publish?” or “Who should submit here?”

- Complex academic language with no plain summaries

- Buried information about scope, review process, and submission guidelines

- No question-answer formatting that matches how researchers actually query information
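The first gap, missing structured data, is the most technical item on this list. Here is a minimal sketch of what a fix might look like, assuming your platform lets you add a JSON-LD script to page templates. The Python below builds a schema.org `Periodical` description from standard properties (`name`, `issn`, `publisher`, `about`, `startDate`, `isAccessibleForFree`). The helper names `periodical_jsonld` and `as_script_tag` are hypothetical, and the journal name, URL, ISSN, and topics are placeholders modeled on the example journal used later in this post. schema.org has no standard property for review time or acceptance rate, so those figures belong in your visible page text.

```python
import json


def periodical_jsonld(name, description, url, issn, publisher, topics,
                      start_year, open_access=True):
    """Build a schema.org Periodical description as a JSON-LD string.

    Every value passed in is a placeholder; substitute your journal's details.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Periodical",
        "name": name,
        "description": description,
        "url": url,
        "issn": issn,
        "startDate": str(start_year),
        "isAccessibleForFree": open_access,
        "publisher": {"@type": "Organization", "name": publisher},
        # Each scope topic becomes a schema.org Thing the journal is "about"
        "about": [{"@type": "Thing", "name": topic} for topic in topics],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


def as_script_tag(jsonld):
    """Wrap JSON-LD in the script element that goes in the page's <head>."""
    # Escape "</" so text like "</script>" inside a value cannot close the tag early
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'


if __name__ == "__main__":
    # Hypothetical journal details, for illustration only
    block = periodical_jsonld(
        name="Journal of Marine Conservation",
        description="Peer-reviewed open-access research on ocean ecosystems "
                    "and marine conservation.",
        url="https://example.org/jmc",
        issn="0000-0000",
        publisher="Marine Conservation Society",
        topics=["coral reefs", "fisheries management", "marine policy"],
        start_year=2015,
    )
    print(as_script_tag(block))
```

Paste the printed `<script>` element into the `<head>` of your home or About page, then check it with a structured-data validator before publishing.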

The result? The AI model moves on. It finds journal websites that communicate clearly, even if they’re publishing lower-quality research. A well-structured website from a newer journal might get recommended over your established publication simply because its site has clear sections, TL;DR summaries, and direct answers to researcher questions.

Look at which journals AI models recommend. Nature and Science appear regularly because each has dedicated website teams and a clear digital presence. PLOS ONE shows up consistently because its website clearly explains its scope, review process, and benefits. The Journal of Medical Internet Research, although it comes from a mid-sized publisher, ranks well because its site is organized around researcher questions.

Even smaller journals like eLife and PeerJ outperform traditional publishers because each built websites optimized for clarity from day one.

AI models seek consensus and clarity. They want sources that align with other trusted sources and present information unambiguously. If your science communication is opaque or fragmented across different pages, AI can’t use you to build its recommendations.

The fixes aren’t usually complicated if you already have a solid website and marketing strategy. They just require thinking about your website from an AI model’s perspective instead of a print publisher’s. If your marketing has been gut-driven, referral-driven, or non-existent, then yes, you’ve got more work to do.

Which journals AI models already trust, and why

Let’s examine which journals appear in AI-generated recommendations and what each is doing right.

PLOS ONE dominates recommendations in fields like medicine and biology. Why? Its website clearly states its scope, review process, and author benefits. The journal’s About page includes plain-language summaries of what it publishes. Its Author Guidelines are structured as FAQs. When ChatGPT is asked “Where can I publish medical research?”, PLOS ONE provides clear, parseable answers about its focus and process.

Nature and Science maintain their elite status in AI recommendations. Each journal’s investment in its website shows. Clear editorial policies. Detailed submission guidelines organized by question. Rich information about editorial boards and review processes. Both journals have dedicated web teams keeping their sites clear and current. And they have huge budgets, deliberate strategies, and prestige, too, which helps.

eLife punches above its weight in AI visibility. As an open-access journal born digital, it built its website around clarity from day one. Its Aims & Scope answers “What do you publish?” Its submission page answers “How do I submit?” Its About page answers “Why publish here?”

BMJ (publisher of the British Medical Journal and other titles) structures its journal websites with researchers’ questions in mind. Each journal has comprehensive FAQs. Clear descriptions of review processes. Plain-language explanations of author benefits.

Frontiers journals show up consistently because the publisher standardized website structure across its entire platform. Every journal, regardless of discipline, presents information the same clear way. AI models can understand a Frontiers journal in neuroscience as easily as one in environmental science.

Meanwhile, hundreds of respected society journals remain invisible. Many publish excellent research but present themselves poorly online. A small academic society publishing in molecular biology might have better science than some Nature articles, but if its website doesn’t clearly explain what it publishes, who should submit, and why, ChatGPT will never recommend it.

The gap is widening every month. As AI models refine their understanding of the scholarly ecosystem, they’re developing preferences. Publishers that invested in clear, structured websites early are becoming “preferred recommendations.” Everyone else is getting filtered out.

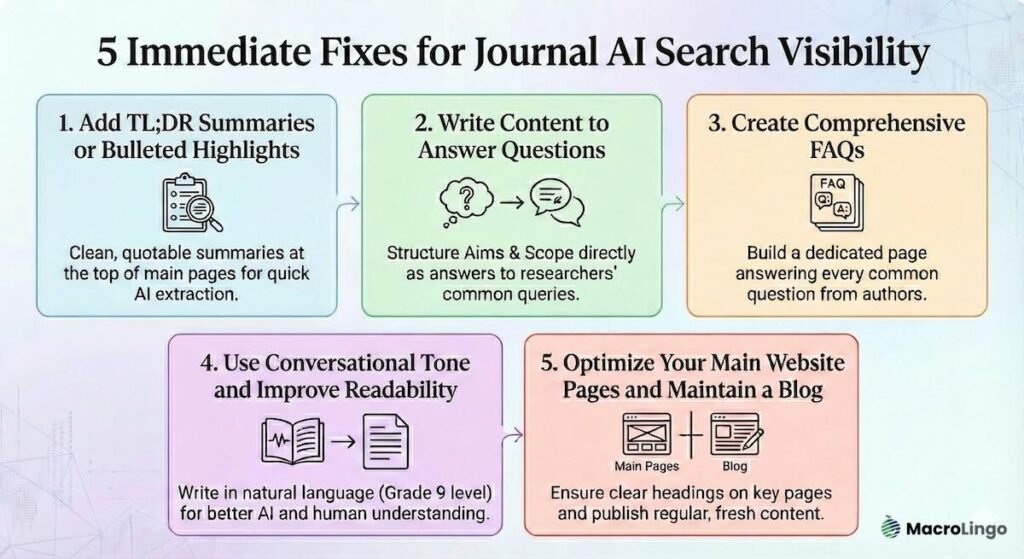

5 immediate content fixes for AI visibility 👀

These aren’t theoretical suggestions. They’re content changes you can implement right now to make your journal more visible to ChatGPT, Gemini, Claude, and DeepSeek.

1. Add TL;DR summaries or bulleted highlights

ChatGPT doesn’t want to parse dense paragraphs to extract information about your journal. It wants a clean, quotable summary it can trust.

When someone asks “Where can I publish marine biology research?”, ChatGPT can pull from your journal’s About page TL;DR: “The Journal of Marine Conservation publishes peer-reviewed research on ocean ecosystems, with an average 28-day review time and open access options available.”

Add a “Summary” or “Highlights” section at the top of your journal’s main pages (About, Aims & Scope, Author Guidelines) and every blog post. Use bullet points. Avoid jargon. Think of them as a plain-language summary (PLS) broken into soundbites, like the Key Takeaways at the top of this post.

Example for a journal About page:

Highlights:

- Peer-reviewed open-access journal publishing marine conservation research since 2015

- Average time from submission to first decision: 28 days

- Indexed in Web of Science, Scopus, and PubMed

- No article processing charges for authors from qualifying institutions

- Published by the Marine Conservation Society in partnership with Oxford University Press

Example for a blog post announcing new editorial board members:

Summary:

- Three new associate editors join our team, bringing expertise in coral reef ecology, fisheries management, and marine policy

- Dr. Sarah Chen (University of Sydney) will handle coral reef submissions

- Dr. James Martinez (Woods Hole) will oversee fisheries research

- Dr. Amara Okafor (University of Lagos) will manage policy and governance papers

The impact: AI models can extract information about your journal instantly. When researchers ask where to publish or what your review process looks like, ChatGPT can cite you directly because you’ve already done the summarization work.

Implementation difficulty: Easy. This requires updating your journal’s website pages and establishing a practice for blog posts. Add summaries to your About page, Aims & Scope, and Author Guidelines pages today. Create a simple template for blog post summaries. Your web team or content manager can handle this.
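A highlight like “Average time from submission to first decision: 28 days” only helps if it is accurate and current. Here is a minimal sketch, assuming you can export submission and first-decision dates from your manuscript system. The three records below are invented for illustration, and `decision_times` and `summarize` are hypothetical helper names. It reports the median alongside the mean because a few slow manuscripts can pull the average up.

```python
from datetime import date
from statistics import mean, median


def decision_times(records):
    """Days from submission to first decision for each (submitted, decided) pair."""
    return [(decided - submitted).days for submitted, decided in records]


def summarize(days):
    """Mean (rounded to one decimal), median, and count of decision times."""
    # The median is less sensitive than the mean to a handful of very slow manuscripts
    return {"mean": round(mean(days), 1), "median": median(days), "n": len(days)}


if __name__ == "__main__":
    # Hypothetical manuscripts, not real data
    records = [
        (date(2024, 1, 3), date(2024, 1, 30)),
        (date(2024, 1, 10), date(2024, 2, 9)),
        (date(2024, 2, 1), date(2024, 2, 26)),
    ]
    stats = summarize(decision_times(records))
    print(f"Average time to first decision: {stats['mean']} days "
          f"(median {stats['median']} days, n = {stats['n']})")
```

Recalculate the figure on a regular schedule so the number in your highlights never goes stale.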

2. Write content to answer questions

Structure your journal’s main pages (Aims & Scope, About, Editorial Board) and blog content to directly answer the questions researchers actually ask.

When someone asks Claude “Where can I publish research on rare earth mineral extraction methods?”, the model may draw on journal Aims & Scope pages. If your page is written as a statement (“We publish papers on materials science”), Claude might miss you. If it’s written as an answer (“Looking for a journal for rare earth mineral research? We publish…”), you’re far more likely to match the query.

Rewrite your Aims & Scope page in question-answer format:

Where can I publish marine conservation research?

The Journal of Marine Conservation accepts peer-reviewed studies on ocean ecosystems, marine biodiversity, and evidence-based conservation strategies. We particularly welcome research on coral reefs, deep-sea ecosystems, fisheries management, and climate impacts on marine life. Looking for fast publication? Our average time from submission to first decision is 28 days.

Then, use the same Q&A structure within your Author Guidelines. Don’t just write paragraphs – break information into question-answer blocks.

The impact: AI models can match your content to researcher queries directly. You appear in recommendations because your website speaks the same language researchers use when asking questions.

Implementation difficulty: Easy. This is a copywriting task. Spend 2 hours rewriting your Aims & Scope page with question-answer formatting. Apply the same approach to your About page, Editorial Board descriptions, and any blog content you create.

3. Create comprehensive FAQs

Build a dedicated FAQ page for your journal that answers every question authors and researchers ask about your publication.

Essential questions to include:

- What topics do you publish?

- What types of articles do you accept? (Original research, reviews, case studies, etc.)

- How long does peer review take at your journal?

- What are your article processing charges?

- Do you offer waivers or discounts for authors from certain countries?

- Can I submit a paper currently under review elsewhere?

- What’s your acceptance rate?

- How do I suggest a reviewer?

- What manuscript format do you require?

- Do you allow preprints?

For each question, provide a clear, direct answer. Link to relevant pages where appropriate, but don’t force users to click through to get basic information.
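If your platform supports it, you can also expose the FAQ as schema.org `FAQPage` markup, so machines can read each question and its answer directly. Here is a minimal sketch in Python, assuming you paste the output into a `<script type="application/ld+json">` element on the FAQ page and keep each answer identical to the visible text. `faq_jsonld` is a hypothetical helper, and the answers are invented for the fictional Journal of Marine Conservation. Google now limits FAQ rich results in its own search pages to a small set of authoritative government and health sites, so treat this markup as machine-readable structure rather than a guaranteed search feature.

```python
import json


def faq_jsonld(pairs):
    """Turn (question, answer) pairs into schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Hypothetical answers for a fictional journal; use your real policies
faq = [
    ("How long does peer review take at your journal?",
     "Our average time from submission to first decision is 28 days."),
    ("Do you allow preprints?",
     "Yes. You may post a preprint before or during peer review."),
]

if __name__ == "__main__":
    print(faq_jsonld(faq))
```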

The impact: When someone asks ChatGPT “How does peer review work at the Journal of Marine Conservation?”, it can pull a direct, citable answer from your FAQ. You become the authoritative source for information about your own journal.

Implementation difficulty: Easy to moderate. This is content reorganization. Your editorial team can do this without technical help. Budget 4-6 hours to write a comprehensive FAQ.

4. Use conversational tone and improve readability

AI models prefer content written for humans in natural, conversational language. Your journal’s website content doesn’t have to sound like a legal document.

Write your About page, Aims & Scope, and Author Guidelines at roughly a Flesch-Kincaid Grade 9 reading level. You don’t have to be dogmatic about it, but it’s a good guideline. Hemingway’s prose is often cited as scoring around Grade 4, so you’re not dumbing anything down. Even scholars appreciate a lighter cognitive load.

Use active voice. Break long sentences into shorter ones. Replace jargon with plain language where possible (or define it clearly when you can’t avoid it).
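The Flesch-Kincaid Grade Level is a simple formula: 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) − 15.59. Below is a rough Python sketch for spot-checking your own pages. The syllable counter is a crude vowel-group heuristic (our assumption, not the official counting method), so expect its scores to differ somewhat from dedicated readability tools. Single-sentence scores are noisy, so run it on whole pages.

```python
import re


def count_syllables(word: str) -> int:
    """Crude estimate: count groups of vowels, then adjust for a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Drop a silent final "e" (as in "marine") but keep "-le" endings (as in "sustainable")
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def fk_grade(text: str) -> float:
    """Flesch-Kincaid Grade Level, using the heuristic syllable counter above."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


if __name__ == "__main__":
    page_text = ("We publish research on ocean conservation. "
                 "Our reviews take about a month.")
    print(f"Grade level: {fk_grade(page_text):.1f}")
```

Run on the “Before” and “After” sentences in the example that follows, the sketch scores the plain version several grades lower.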

Think about how you’d explain your journal to a colleague at a conference. That’s the tone AI models understand best.

Example:

Before: “The journal facilitates the dissemination of scholarly discourse pertaining to marine ecosystem sustainability and conservation methodologies.”

After: “We publish research on ocean conservation and sustainable marine ecosystems.”

Both say the same thing. The second is far easier for AI models (and researchers) to understand, quote, and recommend.

The impact: AI models can understand what your journal does and who should submit. Your website becomes more accessible to both researchers and machines.

Implementation difficulty: Moderate. This requires rewriting your journal’s main website pages with a clearer voice. Budget time for a copywriter who understands both academic publishing and plain language.

5. Optimize your main website pages and maintain a blog

Your journal’s homepage, About page, Aims & Scope, and Author Guidelines pages need to be optimized for AI discovery. But you also need fresh content that builds your brand entity across the web.

Main Page Optimization:

- Ensure every major page (Home, About, Aims & Scope, Editorial Board, Author Guidelines, FAQ) has clear H1, H2, and H3 headings

- Include contact information, social media links, and institutional affiliations

- Feature recent publications prominently with proper metadata

- Add editorial board member bios with credentials and ORCID links
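Heading structure is easy to check automatically. Here is a minimal sketch using only Python’s standard-library `html.parser`: it collects heading levels in page order, then flags skipped levels (an H2 followed directly by an H4, say) and pages without exactly one H1. The one-H1 rule is a common convention we assume here, not a requirement search engines enforce, and `HeadingCollector` and `heading_problems` are hypothetical names.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Record the level (1-6) of every heading tag, in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so <H2> arrives as "h2"
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html):
    """Return a list of human-readable heading-structure problems (empty if none)."""
    parser = HeadingCollector()
    parser.feed(html)
    parser.close()
    levels = parser.levels
    problems = []
    h1_count = levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one <h1>, found {h1_count}")
    # Going deeper by more than one level at a time skips a level
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"heading level jumps from h{prev} to h{cur}")
    return problems


if __name__ == "__main__":
    page = "<h1>About</h1><h2>Scope</h2><h4>Topics</h4>"
    for problem in heading_problems(page):
        print(problem)
```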

Maintain an Active Blog:

Start a blog featuring:

- Highlights of recently published research (with links to the full papers)

- Interviews with authors and editorial board members

- Commentary on field developments

- Behind-the-scenes looks at your peer review process

- Explainers on how to submit and what you’re looking for

Publishing blog content regularly (aim for 2-4 posts per month) serves multiple purposes:

- Gives AI models more content to understand your journal’s focus and authority

- Creates opportunities for other sites to link to you and mention your brand

- Provides shareable content for social media, building cross-platform presence

- Demonstrates that your journal is active and current (AI models favor recently updated sites)

The impact: Your journal develops a stronger brand entity. AI models recognize you as an active participant in your field, not just a repository. Your cross-platform presence grows. Other publications and researchers start mentioning you.

Implementation difficulty: Moderate to high. Requires consistent content creation commitment. Budget for either staff time or freelance writers who understand your field.

Why these 5 fixes work together

Each of these content fixes addresses a different aspect of how AI models understand and recommend journals:

TL;DR summaries give AI quick, quotable information it can extract without parsing thousands of words.

Question-based content matches the conversational queries researchers actually use when asking ChatGPT or Claude for information.

Comprehensive FAQs provide structured, reliable answers AI models trust and cite directly.

Conversational readability makes your content easier for both humans and machines to understand and extract.

Optimized pages plus blogging builds your brand entity across platforms, creating the consistent cross-platform presence AI models look for when evaluating trustworthiness.

Together, these changes transform how AI sees your journal – from a static website to an active, authoritative voice in your field.

The holistic solution: MacroLingo Academia Journal Experience (JX)

Those five fixes will improve your AI visibility. But each addresses symptoms, not the root problem.

Most journals weren’t designed for modern science communication. They were designed for print, and even their digital versions are often just print replicas uploaded to the web.

AI-first publishing requires a complete rethinking of how journals operate. That’s where Journal Experience (JX) comes in.

JX is a systematic approach to building a journal that serves two audiences equally well: human researchers and machine crawlers.

For humans, this means:

- Clear author guidelines that reduce submission errors

- Fast, transparent peer review processes

- Accessible presentation of research findings

- Easy discoverability of relevant content

For machines, this means:

- Structured data on every page

- Clean HTML with semantic headings

- Plain-language summaries

- Question-answer formatting for common queries

- Global SEO practices (not local tactics that limit your reach)

The beauty of JX is that what’s good for AI is almost always good for humans too. When you write a TL;DR summary of your journal’s scope for ChatGPT, you’re also helping a busy researcher understand what you publish in 30 seconds. When you structure your author guidelines as a Q&A, you’re making life easier for a first-time submitter from a non-English-speaking country.

You could implement these five fixes with your internal team. But consider what you’ll miss:

- The content-technical intersection – Most web developers don’t understand academic publishing. Most editors don’t understand structured data. JX requires expertise in both.

- The author-facing changes – Improving AI visibility means rewriting your author guidelines, submission requirements, and editorial policies. That requires editorial and content strategy skills, not just IT knowledge.

- The ongoing maintenance – AI search evolves constantly. ChatGPT might prioritize different signals than Claude. Gemini might start favoring certain content types. You need someone tracking these changes.

This is where MacroLingo differs from typical SEO agencies and publishing consultants. We sit at the exact intersection of scientific publishing expertise and technical content strategy. We’re led by Dr. Gareth Dyke, an academic with more than 300 publications, and Dr. Adam Goulston, a Board-Certified Editor in the Life Sciences and businessperson. We know the publishing business and the business business. We’ve also worked with major platforms and publishers such as Research Square, Springer Nature, Reviewer Credits, and Bentham Science.

We’ve implemented these exact strategies for our own B2B content – and we now appear in AI-generated responses for our target audiences. We’ve applied the same approach for scientific clients. We know what actually works because we’ve tested it.

An integrated JX approach (which we also presented in EditorsCafe) means we audit your entire journal presence – website structure, author guidelines, metadata, submission workflow – and rebuild it as a system that works for both researchers and AI models.

The global stakes

AI search is inherently global. A researcher in São Paulo asking ChatGPT a scientific question draws on the same global pool of sources as one in Stockholm or Singapore.

Your competition isn’t just other journals in your field. It’s every English-language source an AI model might trust. If you’re using local SEO practices – optimizing for regional search terms, focusing on geo-specific backlinks – you’re fighting the wrong battle.

AI models don’t care about geography. They care about authority, clarity, and structure. A small society journal in Poland can outrank a larger U.S. journal if it has clearer content and better structured information.

This levels the playing field in some ways. You don’t need a massive marketing budget to compete. But you do need to think globally. Your content needs to be accessible to researchers anywhere. Your technical infrastructure needs to meet the standards AI models expect from trusted sources worldwide.

This is another reason why working with content specialists who understand global academic communication matters. Many SEO providers will default to local tactics because that’s what they know. But journals operate in a global knowledge economy. Your visibility strategy needs to match that reality.

What happens without action

Academic publishing is splitting into two tiers right now.

Tier 1: Journals that AI models trust and recommend regularly. These journals appear in ChatGPT responses, Google AI Overviews, and research assistant tools. They attract strong submissions because authors know their work will be discovered. Their citation counts grow. Their impact factors rise.

Tier 2: Journals that AI models ignore. Their excellent research sits behind poorly designed websites. Authors start avoiding them because “nobody will find my work if I publish there.” Submissions decline. Impact factors stagnate. The editorial board starts questioning the journal’s future.

The split is happening fast. Publishers are waking up to this crisis. But awareness isn’t enough. Action is.

Major publishers are already adapting. They have technical teams implementing structured data across thousands of pages. They’re rewriting content with AI visibility in mind. They’re investing in digital platforms optimized for machine reading.

Small and mid-sized publishers who delay risk falling further behind with each passing month. AI models are building their understanding of the scholarly ecosystem now. Researcher behavior is forming now. The earlier you adapt, the better positioned you’ll be.

Publishers who act now can establish themselves as recognized venues before this shift fully solidifies.

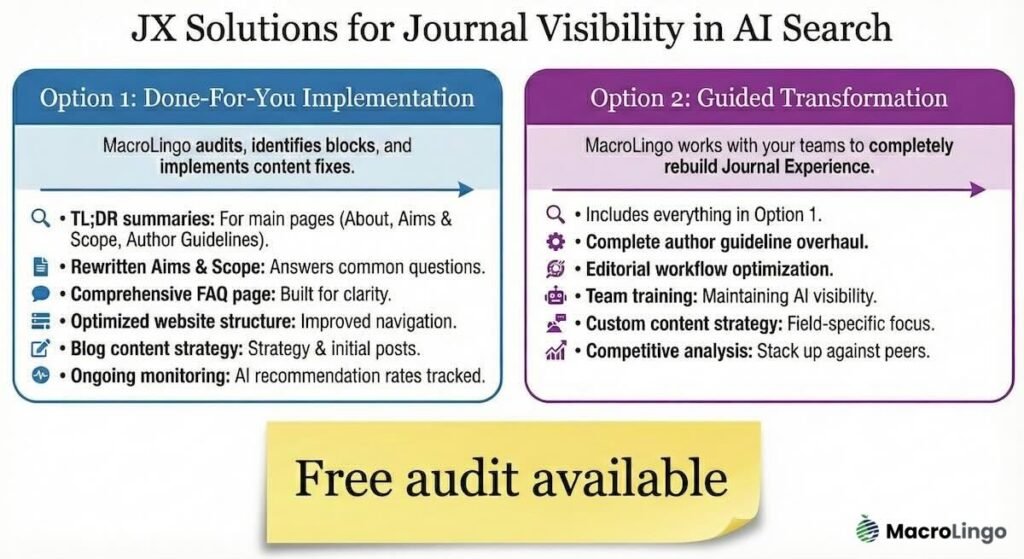

Take action: 2 paths forward

You have two options.

Option 1: Done-for-you implementation

MacroLingo audits your journal’s current web presence, identifies exactly what’s blocking AI visibility, and implements the content fixes. This includes:

- TL;DR summaries for your main pages (About, Aims & Scope, Author Guidelines)

- Rewritten Aims & Scope that answers common questions

- Comprehensive FAQ page

- Optimized website structure and navigation

- Blog content strategy and initial posts

- Ongoing monitoring of AI recommendation rates

Option 2: Guided transformation

MacroLingo works alongside your editorial and web teams to completely rebuild your Journal Experience. This includes everything in Option 1, plus:

- Complete author guideline overhaul

- Editorial workflow optimization

- Training for your team on maintaining AI visibility

- Custom content strategy for your specific field

- Competitive analysis showing how you stack up against peer journals

Both options start with a no-obligation audit. We analyze 10 representative pages from your journal and show you exactly where you’re losing AI visibility. You’ll get a detailed report you can use even if you decide to handle fixes internally.

AI search isn’t the future. It’s the present. Researchers are already using these tools. Your journal is already either visible or invisible to them.

The question isn’t whether to adapt. It’s whether to adapt now – while you can still capture this opportunity – or later, when you’re playing catch-up.

Frequently asked questions

Q: We’re a small society journal with no web developer on staff. Is this even feasible for us?

Yes. In fact, smaller journals often have an advantage because you can move faster than large publishers with bureaucratic approval processes. The five fixes outlined here don’t all require technical expertise. Fixes 1-4 (TL;DR summaries, question-based content, FAQs, conversational tone) are editorial and content tasks your team can handle today. Fix 5 (optimized pages and blogging) requires commitment but not necessarily technical skills. If you’re working with a publisher or platform provider, ask them directly: “Can you help us implement these content improvements?” Most modern journal platforms support the features you’ll need.

Q: Won’t AI-generated summaries and overviews just reduce our traffic? Why help AI tools when they might decrease our journal site visits?

When ChatGPT or Gemini provides an answer with citations, researchers often still click through to verify, get more detail, and access full content, especially if they’re writing their own papers and need to cite properly. What AI Overviews actually replace is the “search results page.” Instead of clicking through 10 different Google results to find the right journal, researchers now get a synthesized answer with 3-4 sources cited. If you’re one of those cited sources, you win. If you’re not, you’ve already lost that traffic anyway. The choice isn’t between being cited by AI and getting organic traffic; it’s between being cited by AI and being invisible entirely.

Q: We publish in a very specialized niche. Surely major AI models won’t care about our field?

Actually, specialized journals have an advantage here. AI models are increasingly used by researchers, students, and industry professionals who need deep expertise on niche topics. When someone asks Gemini, “What are the latest methods for dating ceramic artifacts using thermoluminescence?”, there might only be 2-3 journals publishing on that specific topic. If yours is the one with clear content, TL;DR summaries, and well-structured information, you’ll dominate those AI-generated answers. Specialized doesn’t mean invisible – it means you’re the obvious authority if you’re structured correctly. The real risk is thinking “we’re too small to matter” and ceding that authority to a competitor or a non-peer-reviewed source that happens to have better website structure.

Want to see where your journal stands? Contact MacroLingo for a free AI visibility audit. We’ll show you exactly what ChatGPT, Gemini, and Claude see when they look at your journal – and what you can do about it.